The recent fatal collision involving a Tesla car in Autopilot self-driving mode, followed by another major crash a week later and multiple less dramatic rear-end collisions, is calling attention not only to the state of autonomous-driving technology itself but also to public perception of, and trust in, self-driving cars.

Developing autonomous-driving capabilities that are safe under most conditions is proving to be as difficult and time-consuming as some have predicted. Most manufacturers are taking a conventional path, adding driver-assistance features gradually and building toward full or near-full autonomy that they expect to mature by the end of this decade. But Tesla, famous for its willingness to challenge the status quo and take business and technology risks, has chosen a much faster, if riskier, route.

Tesla has been pushing to get advanced functionality, including safety features, on the road faster and more frequently. This strategy relies on software-driven functionality, wireless connectivity, and remote update capabilities to gather feedback from vehicles and customers and to continually fine-tune systems and features. But this approach may have led to a somewhat relaxed attitude toward releasing software updates that weren’t fully tested and foolproofed. Tesla’s loyal customers are apparently comfortable with this approach, but some may have a different attitude when they find themselves serving as crash test dummies.

Tesla’s response to the recent incidents centers on the vehicle and the Autopilot technology, and on explaining why the system failed, causing a death. In a recent blog post, the company states: “This is the first known fatality in just over 130 million miles where Autopilot was activated”; it goes on to describe the investigation as seeking to “determine whether the system worked according to expectations,” with the well-advertised caveat “Always keep your hands on the wheel. Be prepared to take over at any time.”

A Matter of Trust

Autonomous and semi-autonomous driving technology offers more than time-saving convenience. Think, for example, about its tremendous potential impact on the well-being of the disabled and the elderly. It will no doubt reduce the number and the severity of car crashes in a very meaningful way. But to get to this point, the technology must gain the trust of the public: the drivers, passengers and pedestrians who share the roads with Tesla drivers on Autopilot.

Trust doesn’t equate to interest in, or even excitement about, future technologies. Google’s autonomous car generates excitement, but its fairground-ride look doesn’t necessarily evoke trust. Even one fatality erodes trust in the technology, and even more so in the company responsible for it. This trust is based on a complex confluence of technology maturity, relevant regulations and public perception.

So questions about whether self-driving technology is ready to be released to just any driver on public roads will continue to linger.

But the public will not agree to play the role of crash test dummies.

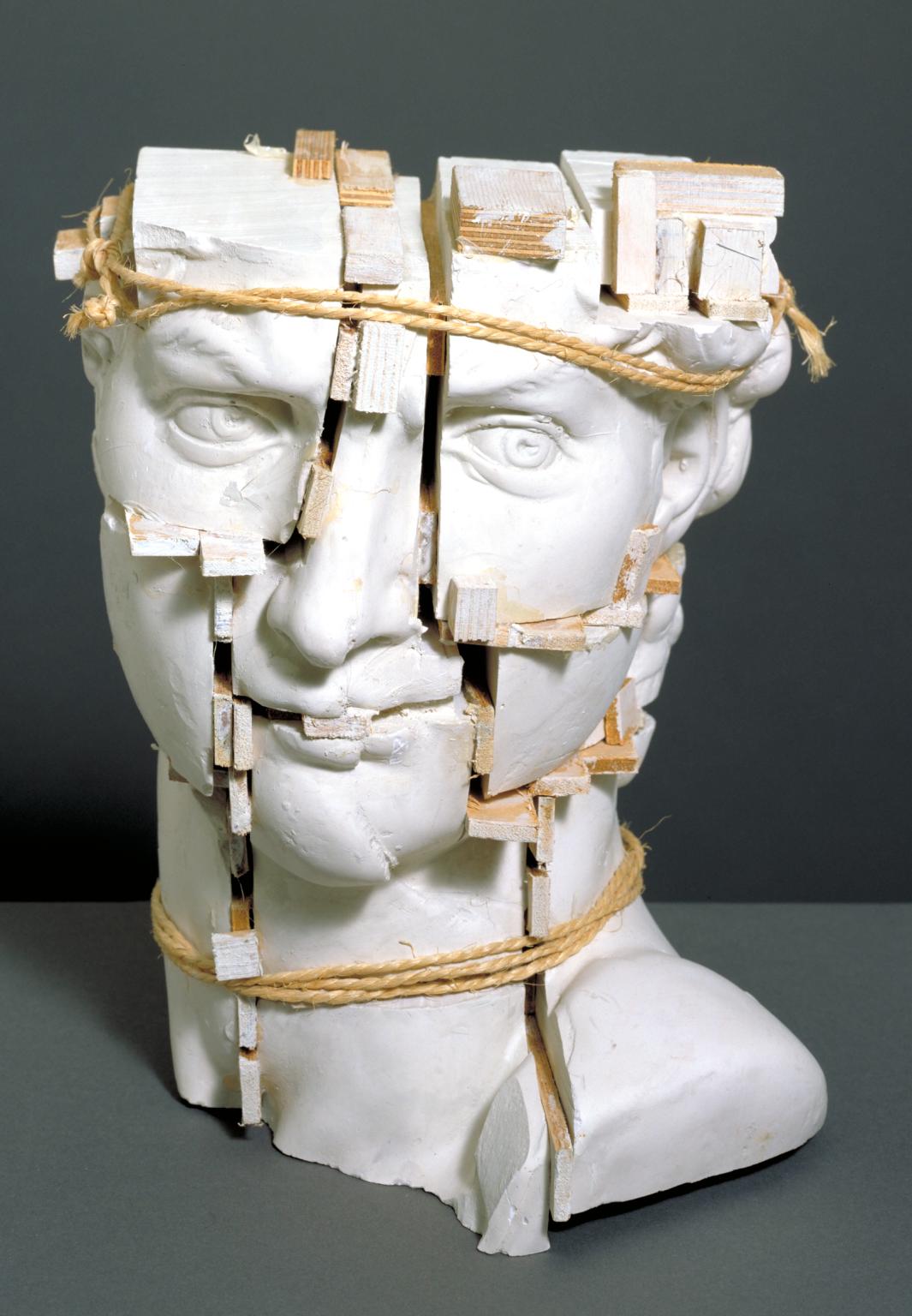

Image: Michelangelo’s ‘David’ by Eduardo Paolozzi (1987)