I recently returned from a series of conference keynotes and lectures across 10 time zones. It is clear that the infatuation technology vendors and the media have with sophisticated-sounding technology terms is as strong as ever.

Fancy technology terms such as artificial intelligence (AI) and machine learning conjure up a spectrum of AI-based movie characters, from the inanimate HAL in *2001: A Space Odyssey* to the seductive feminine AIs Samantha in *Her* and Ava in *Ex Machina*.

Even technology experts and software vendors who should know better never miss an opportunity to add “machine learning algorithms” to their product descriptions.

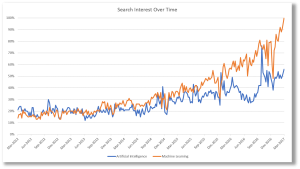

The graph above shows the change in the frequency of Google searches for the terms artificial intelligence and machine learning in the U.S. over the past five years.

Many, if not most, of these fanciful systems do not employ AI technology. Take, for instance, the “first toothbrush with artificial intelligence” that debuted at this year’s Consumer Electronics Show (CES) in Las Vegas. Setting aside the possible health benefits of encouraging kids to develop better oral hygiene habits through games and weekly progress reports, the claim that these benefits are achieved using “patented deep learning algorithms” is patently dubious.

There are endless other examples of inflated terms used to attract readers and consumers: basic data collection and reporting flaunted as “machine learning,” and “AI-based prescriptive analytics” algorithms that are little more sophisticated than a simple collection of if-this-then-that rules.

Another fashion accessory du jour in corporate communications and product descriptions is “predictive,” as in predictive analytics and predictive maintenance. While some predictive systems might indeed employ sophisticated statistical and pattern-analysis algorithms, many others are little more than busy, colorful data dashboards.

This is not simply a matter of advertisers using inflationary language to describe product capabilities. Many systems that claim to use artificial intelligence to solve complex technical tasks previously handled by humans lack the basic performance characteristics of AI: they aren’t self-directed, especially when dealing with uncertainty, and they are not sufficiently task-aware and self-aware to learn and improve over time on their own.

These fancy terms and lofty promises will inevitably lead to deeper disappointment when such systems, often quite impressive in simple prototype demonstrations, prove unable to handle the diversity, complexity, and scale of real-world applications.

But at the end of the day, what matters is the outcome, not the algorithm. Non-AI systems can perform tasks that lead us to believe they are AI, not by mimicking human behavior but by offering powerful tools that extract insights and provide valuable advice. Statistical analysis, case-based reasoning, if-this-then-that (IFTTT) rules, and other methods can provide significant value, even though they may not represent “deep machine learning” technologies.

This is how these systems should be evaluated: by the value they deliver, not by the inflated promises of sophisticated-sounding terms.

P.S. The title of this post was inspired by an unrelated idea introduced by the witty and entertaining Victor Borge (it’s a classic!)