We expect much from the autonomous cars of the future.

We want them to be better drivers than us humans. We want them to handle driving tasks effectively and safely, avoiding fatalities, injuries, and property damage. And we expect them to be a significant factor in reducing car crashes that kill 1.35 million people every year, more than half of whom are pedestrians, motorcyclists, and cyclists.

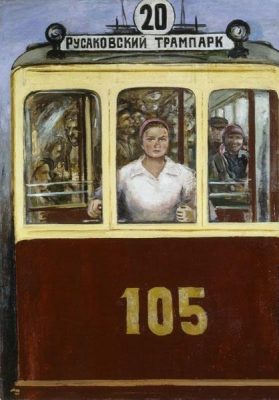

And when faced with complex driving situations, we want the autonomous car of the future to navigate them gracefully and in a manner accepted by society. We continue to debate the intricacies and myriad variations of the Trolley Problem and the details of the technology we expect will one day deliver the appropriate behavior.

And only when cars can demonstrate these abilities will we agree to use them.

But are we setting the right goals and benchmarks? Should the trolley problem be the gold standard of good, safe, and acceptable driving?

Instead of debating the consequences and ethical merits of decisions made by an autonomous vehicle, let’s turn some of these questions to you, the reader:

- How often do you face a situation of the kind depicted by the trolley problem? Have you ever been forced to make a split-second decision prioritizing life and death outcomes? Do you know anyone who has?

- Did the driver’s education course you took in high school teach you how to make this kind of decision?

We usually expect technology to perform better than humans in the tasks it was designed for. Whether bookkeeping software, automated bank cashiers, or assembly line robots, we allow technology to take over human activities and skills because we believe that by doing so we improve the accuracy, efficiency, and quality of work and, at the same time, enhance our standard of living.

Therefore, it is only natural that we expect autonomous vehicles to perform better than humans. But are we setting the benchmark too high? Is the trolley problem so infrequent that using it as a performance benchmark erects an unnecessary barrier to adoption? Should we lower our expectations and see the benefits sooner?

On the other hand, we may not be ready to accept mistakes made by autonomous cars. We have learned to accept mistakes made by human drivers. We educate and punish repeat offenders and those found to be grossly negligent. But will we have the same attitude when judging autonomous driving technology? And whom do we hold accountable and punish? The manufacturer? The software designer?

Final note. This article is not intended as an argument for or against autonomous driving technology. Rather, it adds a dimension to a very complex subject.